In the field of user experience design, we research users and often talk about the user pain points we’ve observed. When I use the word pain here, I don’t mean inconveniences, such as when I can’t find just the right streaming music playlist to match my mood. I mean tasks that emotionally hurt, such as knowing you need to file a timesheet by a deadline but being unable to figure out how to do that with the enterprise timesheet software you’re forced to use, to the point that you are cursing the software.

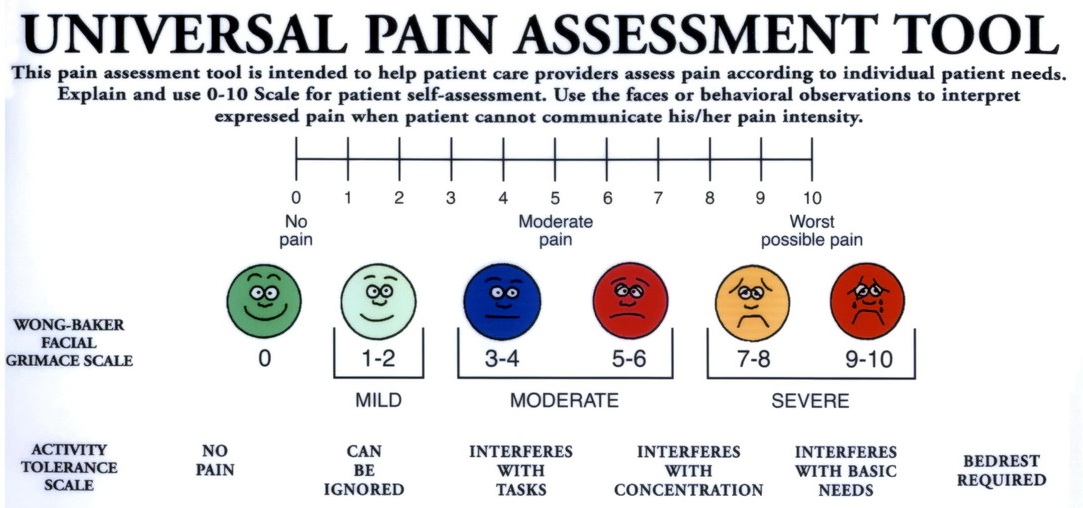

The phrase “pain points” implies discrete things or moments in time. But in some cases users interact with a system on an ongoing basis, and their pain is continuous. Usually when someone is in pain for an extended period, we call it suffering. When it comes to our bodies, we make this distinction between minor pain and suffering all the time. A minor pain we tolerate and wait out. When we’re suffering, we go to the doctor.

When designing for users of software, differentiating between minor pain and suffering helps me make different choices about how to design solutions.

One consideration is how long to spend designing a solution. If we reduce either the pain or the time someone is in pain, we reduce the suffering. But this can involve a tradeoff: we can design an amazing solution that removes all of the users’ pain, but that could take a lot of time. We could design something and release it to users quickly, but it might only relieve part of their pain. Or we might find a middle road where we alleviate the worst pain first and then gradually alleviate the rest with subsequent releases.

Mathematically, we can phrase it like this:

Pain × Time = Suffering
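To make the tradeoff concrete, here is a small Python sketch that applies the formula month by month to the three options described above. It assumes pain can be scored on an arbitrary numeric scale and treats suffering as the sum of each month's pain, which is one way to extend the formula to pain that drops after each release. The function names, strategies, and numbers are all hypothetical illustrations, not measurements.

```python
# A minimal sketch of "Pain x Time = Suffering" when pain changes over time.
# Assumption (hypothetical): pain is scored in arbitrary units per month, and
# suffering is the sum of each month's pain, i.e. the area under the pain curve.


def pain_curve(horizon_months, releases, initial_pain):
    """Return a month-by-month list of pain levels.

    releases: (month, pain_after_release) pairs; each release sets the
    pain level from that month onward.
    """
    pending = sorted(releases)
    pain = initial_pain
    curve = []
    for month in range(horizon_months):
        # Apply every release that has shipped by this month.
        while pending and pending[0][0] <= month:
            pain = pending.pop(0)[1]
        curve.append(pain)
    return curve


def total_suffering(curve):
    """Sum pain x time, where each entry in the curve covers one month."""
    return sum(curve)


# Hypothetical strategies over a 12-month horizon, starting at pain 10.
strategies = {
    # Spend six months on a solution that removes all of the pain.
    "complete solution, slow release": pain_curve(12, [(6, 0)], 10),
    # Ship after one month, but only relieve half of the pain.
    "partial solution, quick release": pain_curve(12, [(1, 5)], 10),
    # Remove the worst pain after one month, the rest by month four.
    "worst pain first, then iterate": pain_curve(12, [(1, 4), (4, 0)], 10),
}

for name, curve in strategies.items():
    print(f"{name}: {total_suffering(curve)}")
```

With these made-up numbers the script prints 60, 65, and 22, so the middle road comes out ahead. The ranking depends entirely on the assumed pain levels and release dates; the point is only that shortening the time in pain can matter as much as eliminating the pain.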

This tradeoff also helps me choose appropriate design methods. Let’s say, for example, that the scope of my problem is large: perhaps helping the U.S. government create a better way for residents to understand and file federal taxes. I would need a design methodology that can encompass many types of users, information, and interactions, such as service design. If preliminary research revealed that users suffer most when figuring out how much to pay in estimated taxes, then I might focus on that problem. To alleviate that particular suffering quickly, I would need a design methodology that helps me find a solution rapidly, such as Lean UX.

I’ll stretch this analogy further to illustrate how I think about allocating resources based on design needs. Near where I grew up is a large hospital system that offers patient care on different time horizons. One, the hospital has an emergency room to triage critical patient needs. Two, it has doctors who provide scheduled annual checkups. Three, it has specialists who perform larger procedures such as surgery. And four, it has research labs where it can develop new cures.

Now imagine there’s a tragic accident nearby. The hospital could allocate more resources to the emergency room to handle demand. Alternatively, imagine a new disease is causing a pandemic; the hospital could allocate more resources to the research labs to find a cure.

Similarly, my team allocates resources depending on the kinds of problems our users have. One, we embed designers in agile feature teams to quickly alleviate critical user suffering. Two, we perform proactive usability testing to track how usability changes over time. Three, we occasionally dedicate a team to a large change, such as restructuring the information architecture. And four, we do research to arrive at overall design patterns, such as infinite scrolling vs. pagination. Our job titles and work assignments are fluid enough to respond to (constant) change.

All that to say:

- I try to stay open to all methods and avoid treating every problem as a nail I can hit with my favorite design-method hammer.

- When there is clear user suffering, I factor time into my choice of design method to alleviate suffering quickly.